So I have a new role at work … (wow, non-sequitur much?)

Ok, well, that was a rough start. What I meant to say is that I have a new role at work. This requires that I work with some of our larger software, and so I have built a large VMware image with a 10GB RAM profile, stealing most of the RAM in my 16GB machine. So of course I upgraded half of it to 8GB sticks, giving me 24GB in total and some much-needed relief. I will upgrade the rest later …

You are wondering by now when I am getting to the test … a few moments more. One thing that has grown tiresome over time is the context switching between the laptop (which I keep open on one monitor through remote desktop), my main machine for research (which I do a lot of when I am working as I choose to look up issues and questions rather than trying to write down every detail) and the virtual machine.

So I need a third (and eventually a fourth) monitor. This would allow me to arrange each of the contexts on a separate monitor and thus I would be able to work without constantly flipping windows. It is an efficient way of working and is essentially irritation free.

Except that my GTX570 will not support more than 2 monitors, despite having many extra connectors on the back. But the newer GTX650ti or GTX660 will do that just fine. And they have twice as many cores, so my Sony Movie Studio rendering times should shrink, yes?

No. In fact, the CUDA architecture starting at the 600 series cards is called Kepler. The architecture in the 400 and 500 series was Fermi. And guess what … Fermi is much faster for compute operations, although Kepler is much faster for pure gaming. So swapping my 500 series card for a 600 series one is not an obvious win … yet there are reports that some people have got rendering times that are similar. And I thought … maybe the 660 would work as well, or maybe GPU rendering is not such a big deal in Movie Studio anyway … after all, it’s not Vegas Pro.

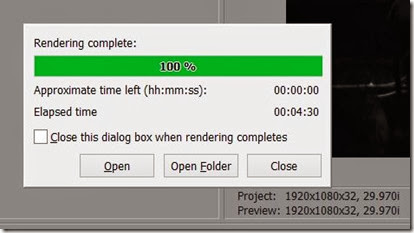

Well, in fact the GTX570 speeds things up quite a bit. I tested it by re-rendering Nick’s cover of “I Killed the Monster” in each mode and the times were pretty dramatically different. I used identical settings in each case and even used fairly lightweight settings like 720p. Still a big difference …

Now, part of this is the fact that the file is much larger for the CPU because it rendered a slightly softer interpretation of the shadows, bringing in a lot of extra shadow detail that it had to process. And the file it produced is significantly larger, despite the actual detail being essentially identical to the eye.

CPU vs GPU

Also interestingly, I prefer the slightly more contrasty look that the GPU rendering chose. So 30% shorter rendering times and 18% smaller files with a nicer contrast (at least in this case) and no discernible difference in motion or detail (again, for this example) makes me think that GPU rendering matters a lot to me.

Which means that jumping to a Kepler card should probably wait for a while.

So how do I get that extra monitor if I don’t want to grab a card that supports the extra monitor? Well, I have a few choices. One is to try adding one of my older video cards into the box. For example, I have an older GTX9600 … which even has some CUDA cores to add to the party. So I may give that a shot. (Note: I already tried it once but screwed something else up and it would not boot. It turned out not to be the card’s fault, as the problem continued after I removed it. I’ve fixed that so I plan to try again.)

If worst comes to worst then I can always just buy a cheap USB video card to get that third monitor for now. There are many options … but for those who use Movie Studio or Vegas Pro, the GPU rendering definitely beats CPU rendering, at least for my CPU.

My CPU is the 8 core AMD FX-8150, which is running at the stock 3.6GHz (turbo 4.2GHz). It is a bit slower to render in the Adobe Premiere video benchmarks on Tom’s Hardware than the 2700K Sandy Bridge, but is in the ball park. The extra cores help of course. The ratio of time to the fastest processor on the planet at this time (i7-3930K 6 cores / 12 threads) is 252/173, so the faster chip would finish in about 31% less time. Since my GTX570 already gives me about that much, I think I’ll just stick with that.
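
For anyone who wants to check that arithmetic, a ratio like 252/173 can be read two ways: how much less time the faster chip needs, or how much longer the slower chip takes. Here is a minimal Python sketch using only the two benchmark figures quoted above; the function and variable names are made up for illustration.

```python
def percent_time_saved(slow, fast):
    """Percent of render time saved by going from the slow time to the fast time."""
    return (slow - fast) / slow * 100


def percent_longer(slow, fast):
    """Percent longer the slow time is, relative to the fast time."""
    return (slow - fast) / fast * 100


# Benchmark times quoted above (same units for both, so the units cancel).
fx8150_time = 252
i7_3930k_time = 173

print(f"Faster chip saves {percent_time_saved(fx8150_time, i7_3930k_time):.1f}% of the time")
print(f"Slower chip takes {percent_longer(fx8150_time, i7_3930k_time):.1f}% longer")
```

The first number is the one to compare against a "shorter rendering times" figure like the GPU result, since both are measured against the slower run.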

Anyway … for anyone out there who desires fast rendering, the GTX570 and 580 cards are a good bargain right now. Were I to jump to a Kepler card, I think the lowest I would consider is the GTX660ti. That has enough cores to overcome the lack of agility in the Kepler architecture for rendering. YMMV of course …